3 Lessons Learned From Testing Hundreds Of Onboarding Emails

We set out to build a better onboarding experience. Here’s what we found.

It didn’t matter.

None of the marketing in the world — the redesigned site, the conversion hacks, the blog — meant a thing if we couldn’t get users to stick around.

With a trial-to-customer conversion rate of just over 8%, we had to do better.

Having spent *a lot* of time and effort rebuilding our marketing site from scratch with great results, we suspected that we’d have to take a completely new approach.

As we’d learned from our site redesign, asking “how can we get users to stick around?” (or, in the case of the site, “how do we get them to convert?”) was the wrong question.

We had to ask: “how can we serve our users better?”

How can we make their trial so good, and the value they get from it so high, that becoming a customer will be the obvious choice?

Part of that is on the product side: we’ve been focusing hard on our onboarding user experience since we started, and we’re continuing to test a variety of changes.

We *know* that the product *can* deliver massive value to users, because we see it every day.

But we also know that helping a user bridge the gap between sign-up and high engagement can have a *huge* impact on how much value they get from the app, and, in the end, whether or not they convert.

Changing Our Perspective

In the past, if you had asked me why we send onboarding emails to our trial users, I would have said, without missing a beat, “to get them to use Groove.”

It seemed so obvious.

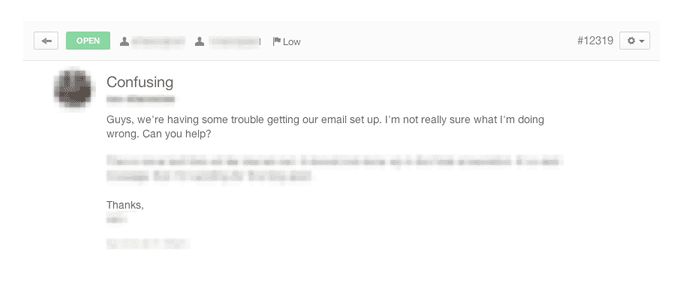

And if you look at the emails we used to send, it *was* obvious:

Useless product tidbits that had little to do with what the user actually wanted.

Our metrics showed it, too: average open rates hovered around 28% for the first email. Great for marketing to prospects, *terrible* for someone who signed up for your product within the last five minutes.

The painstaking process of redesigning our site with a focus on what the *customer* wants, and not what *we* want them to do, was absolutely transformative.

It changed the way we thought about our business.

And it was time to apply those changes to our onboarding emails, too.

In our redesign research, just like we had asked probing questions about our customers’ experience using our product, we had also asked a number of questions about our customers’ onboarding experiences.

We asked about their first login experience.

We asked about their learning experience.

We asked about the emails we had been sending, and how useful (or useless) they had been.

We learned *a lot*. Especially about how bad our onboarding emails were.

The biggest thing we heard in talking to our users: *they simply don’t care* about “getting more out of their Groove account.”

They care about *real* things.

Happier customers.

More efficient workflows for their team.

*That’s* what those emails had to deliver. And so that’s what we set out to do.

Takeaway: There could be a huge gap between what you’re telling your customers and what they want (or *need*) to hear. The only way to find out the truth is surprisingly simple: ask.

The Three Biggest Wins From Our Onboarding Optimization

During the process of writing our new emails, we tested *hundreds* of different emails, some differing only by very minor tweaks: subjects, bodies, to/from fields, calls to action and more.

And we tracked the results closely.

Open rates, click-through rates, engagement, conversion from trial, retention.

We ended up with many thousands of data points, but only three *clear* wins.

By far, three key improvements had the biggest impact on how much value our users got, and ultimately, on trial-to-customer conversions.

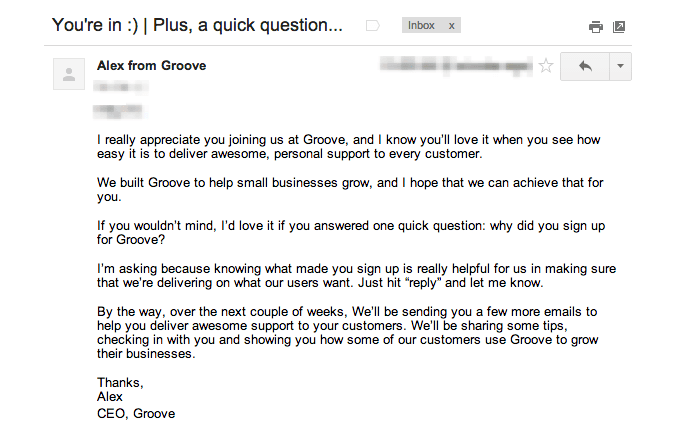

1) “You’re In” Email

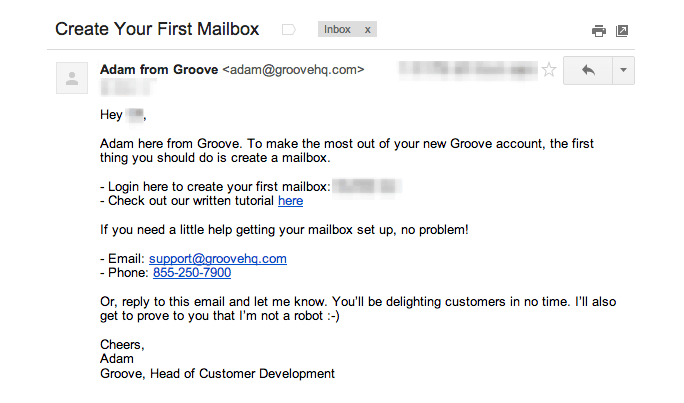

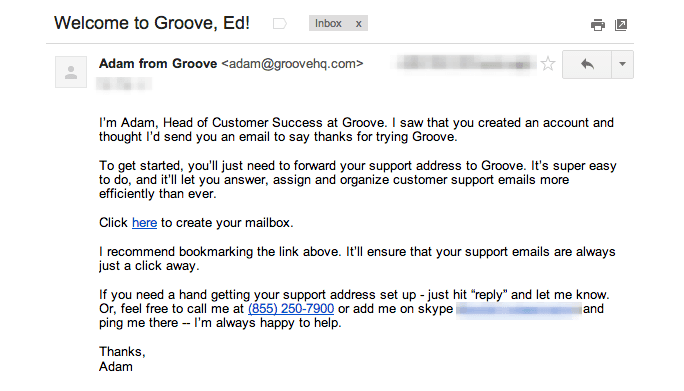

This email is the flagship of our onboarding sequence. It’s the first thing anyone who signs up for Groove gets in their inbox.

It asks a simple question: why did you sign up for Groove?

With a 41% response rate, we get *massive* amounts of qualitative marketing data about the “decision triggers” that drive people to sign up for Groove.

Aside from that, this email accomplishes three important things:

It establishes a relationship between the customer and me (the CEO).

Emails that came directly from me, rather than from a nameless Groove account, performed better across the board. We learned that when users know that they have a direct line to the CEO, they feel more connected to Groove, and are less likely to quit if they hit a snag.

It helps us identify any unique needs that the user may have.

Knowing the specific reason a user signed up for Groove helps our team customize our interactions with that user to ensure that the product is perfect for them.

If they signed up because Zendesk is too complicated, we focus on walking them through processes that they’ll recognize from Zendesk that are simpler to do on Groove.

If they signed up because their team is growing rapidly, we’ll focus on showing them how to easily add and onboard new employees.

It sets the stage for what’s coming.

What’s noticeably missing from this email is any sort of product-related call-to-action (e.g., “go download our mobile app”).

That’s very much on purpose.

In our testing, we found that product emails immediately after signup went largely ignored.

This may be because our in-app walkthrough, which we spent *a lot* of time improving, is good enough to keep the user engaged in their first session.

However, we did find that product emails help later on in the process, and that adding the note letting the user know that we’ll be sending those emails actually increased open rates for the messages that followed by about 8%.

Takeaway: Counterintuitively, a product-focused message was *not* the best-performing post-signup email. A different approach has given us invaluable insight for marketing, support and building relationships with our customers.

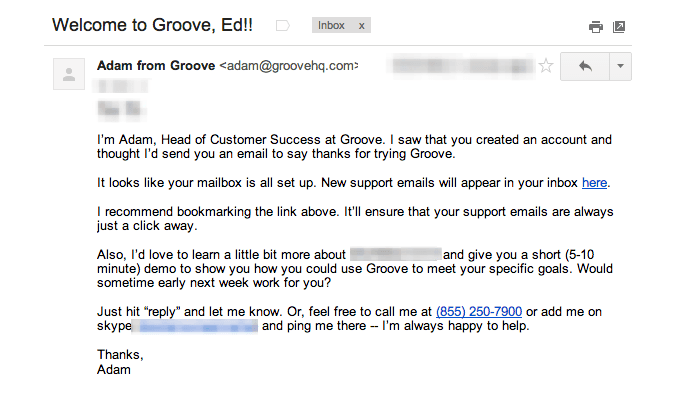

2) Behavioral Triggers

Our old email drip was “standard,” in that every single user got the same 14-day sequence.

A user who logs in three times per day and is highly engaged from day one would get the same exact emails as a user who never logged in after their first session.

This was bad.

Those two users are in *completely* different mindsets, and they’re at totally different points in the trial cycle. Sending advanced-level product emails to disengaged users is like asking “would you like fries with that?” before a customer even steps up to the counter.

We tested customizing the email sequence based on user behavior, and it turned out to be one of the best things we did.

At first, we did this manually. It was painstaking, but the results made it clear that the effort was worth pursuing (and automating). End-of-trial conversions increased, on average, by 10% or more in most of our cohorts.

Now, a user might get an email like this on the third day of their trial:

Or, they might get this one:

Or one of several others.

In our 14-day sequence that, for most users, includes six total emails, we have 22 different messages that go out based on user behavior.
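If you want to automate something similar, here is a minimal sketch in Python of how a behavior-based pick for a day-three email could work. The `TrialUser` fields, the conditions and the message names are all assumptions made up for illustration; the actual 22 messages and their triggers aren't shown here.

```python
from dataclasses import dataclass


@dataclass
class TrialUser:
    # Hypothetical engagement signals; swap in whatever you actually track.
    logins_last_3_days: int
    has_connected_mailbox: bool


def pick_day_three_email(user: TrialUser) -> str:
    """Return the name of the day-three message for this user."""
    if user.logins_last_3_days == 0:
        # Disengaged: nudge them back instead of teaching advanced features.
        return "come_back_and_finish_setup"
    if not user.has_connected_mailbox:
        # Active but stuck on a setup step: help with that one step.
        return "how_to_connect_your_mailbox"
    # Engaged and set up: now the advanced product email makes sense.
    return "advanced_tips_for_active_teams"
```

The point of the sketch is the shape, not the rules: each send slot in the sequence looks at what the user has done so far and picks one message from several candidates.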

Takeaway: Startups stress over hyper-targeted marketing, but once a user signs up, they tend to treat every user the same. Two people using your product can still have very different needs, and your onboarding emails should reflect that.

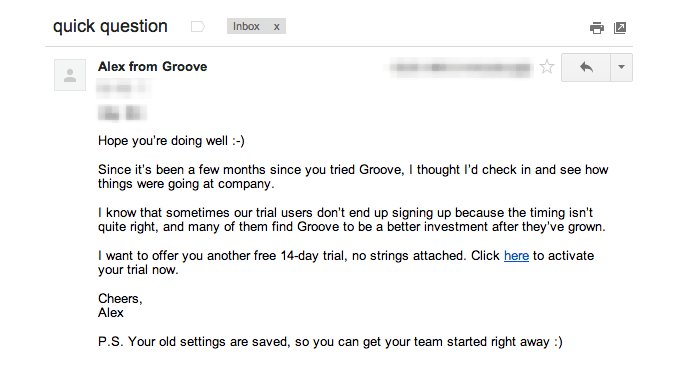

3) The Winback

Another mistake we were making was abandoning the users who abandoned Groove.

We had a 14-day sequence that coincided with our 14-day trial, and that was it.

If the user didn’t convert, they’d stop hearing from us.

As we learned in talking to some of those users, including quite a few who ended up becoming customers later on, we were leaving customers on the table.

We heard, more than a few times, sentiments like “I liked Groove, we just weren’t ready for it.”

Just because Groove wasn’t right for a customer when they signed up doesn’t mean that we’ll *never* be right for them.

So we began testing winback emails at 7, 21 and 90 days after a user didn’t convert.

The 90-day email has converted at around 2%.

That’s not impressively high, and we’re still working on it, but that’s 2% that wouldn’t be Groove customers right now otherwise.
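The schedule itself is simple to express in code. Here is a rough sketch, assuming the 7, 21 and 90 days are counted from the day the trial ended without a conversion (the function name and that counting rule are assumptions for illustration):

```python
from datetime import date, timedelta

# Days after an unconverted trial ends at which a winback email goes out.
WINBACK_OFFSETS_DAYS = (7, 21, 90)


def winback_send_dates(trial_end: date, converted: bool) -> list[date]:
    """Return the winback send dates, or an empty list for converted users."""
    if converted:
        return []
    return [trial_end + timedelta(days=d) for d in WINBACK_OFFSETS_DAYS]
```

For example, a trial that ends unconverted on January 1, 2024 would be scheduled for January 8, January 22 and March 31.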

Takeaway: Not every customer quits because they dislike your product. There could very well come a time in the near future when you’re perfect for each other, but you’ll never know unless you reach out to them.

How To Apply This To Your Business

As always, this is not a comprehensive guide; it’s simply a snapshot of what’s working for us right now.

And while what works for us may not necessarily work for you, hopefully you can use what we’ve learned to figure out what approach would work for *your* unique customers.

To be sure, this is also not a “how we did it” success story. Our email sequence is very much a work in progress, and we continue to run new tests and learn new things every week.

But we’ve been amazed by what we’ve learned so far. And by applying some of these tests to your own onboarding emails, my hope is that you’ll be amazed, too.